I. Introduction:

The purpose of the blog post:

This blog post is here to help candidates looking for infrastructure automation and cloud engineering jobs. If you're one of them, you know how important it is to have a good grasp of Terraform, a widely used Infrastructure as Code (IaC) tool that lets you provision, manage, and scale cloud resources. Preparing for a Terraform interview can be overwhelming, which is why we've created this guide to walk you through the most common and critical interview questions you may encounter. Our goal is to equip you with the knowledge and confidence to ace your Terraform interview and land that dream job! The questions cover a broad range of Terraform topics, including core concepts, syntax, and best practices.

Overview of Terraform and its importance in infrastructure automation:

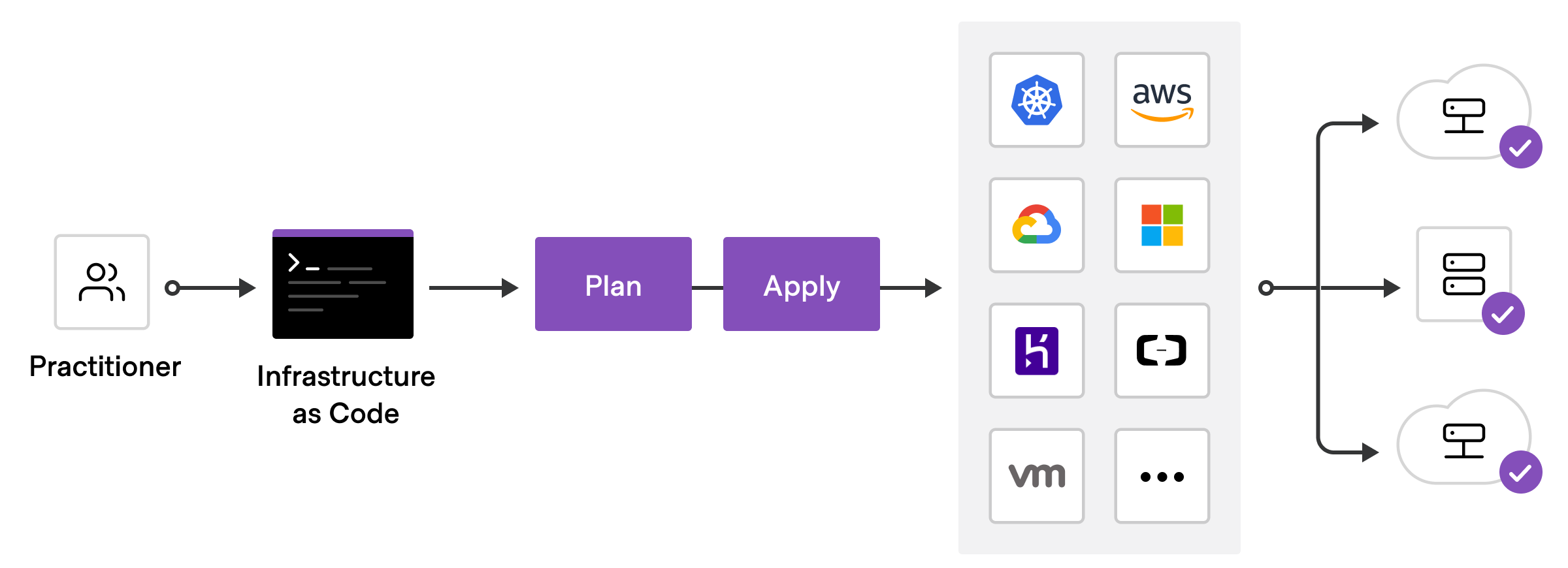

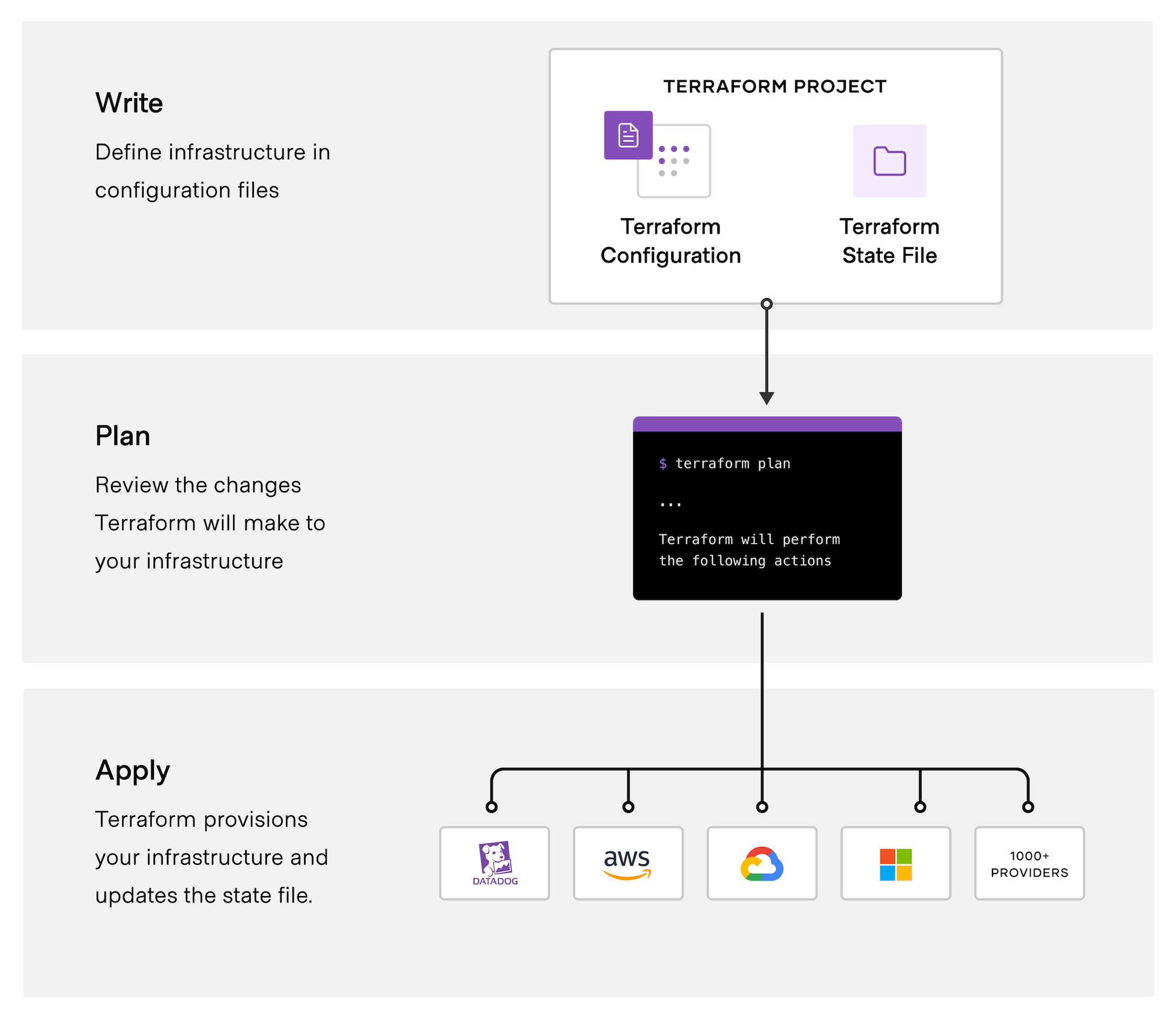

Terraform is a powerful tool that allows companies to automate their infrastructure management. It lets you use code to define and manage cloud resources, which means you can easily create, update, or delete resources across different cloud providers. This eliminates the need for manual configuration, which reduces the risk of human error and saves time.

With Terraform, you can easily provision resources like virtual machines, load balancers, and databases on popular cloud platforms like AWS, Azure, and Google Cloud. You can also automate tasks like scaling, updates, and backups. By doing so, teams can focus on more critical tasks that drive business value, like developing new features or improving customer experiences.

One of the most significant benefits of Terraform is that it allows for collaboration between team members. By using version control systems like Git, infrastructure code can be managed and reviewed in a similar way to application code. This promotes best practices like code reviews, testing, and continuous integration/continuous deployment (CI/CD), which can lead to more efficient and reliable infrastructure management.

II. The Must-Know Terraform Interview Questions:

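1. What is Terraform and how is it different from other IaC tools?

Terraform is a tool from HashiCorp that lets you define and manage cloud resources using declarative configuration files written in HCL (HashiCorp Configuration Language). Unlike provider-specific Infrastructure as Code (IaC) tools such as AWS CloudFormation or Azure Resource Manager templates, Terraform works across multiple cloud providers through its provider plugins. It tracks the resources it manages in a state file and lets you preview changes with terraform plan before applying them, reducing the risk of errors and making it easier to manage infrastructure at scale. Because configurations are plain text files, teams can also collaborate on them through version control systems like Git.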
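To make "managing cloud resources using code" concrete, here is a minimal sketch of a Terraform configuration. The region and bucket name are placeholders we picked for illustration, not values from any real project.

```hcl
# Minimal sketch: one provider and one resource, declared rather than scripted.
# The region and the bucket name are illustrative placeholders.
provider "aws" {
  region = "us-east-1"
}

resource "aws_s3_bucket" "example" {
  bucket = "example-terraform-demo-bucket"
}
```

Running terraform plan against a configuration like this shows the bucket that would be created, and terraform apply creates it after you confirm.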

2. How do you call a main.tf module?

In Terraform, main.tf is only a conventional name for the primary configuration file; it is not a module by itself. Terraform loads every .tf file in the working directory and treats them together as the root module, so when you run terraform plan or terraform apply in that directory, the code in main.tf is picked up automatically. To call a separate (child) module from main.tf, add a module block whose source argument points to the module's location, such as a local path, a Git repository, or the Terraform Registry, and then run terraform init so Terraform can install it. Note that the -backend-config flag of terraform init supplies backend settings; it does not tell Terraform where configuration files are located.
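As a rough sketch of what calling a module looks like in practice, the block below calls a child module from the root main.tf. The path ./modules/network, the cidr_block input, and the vpc_id output are hypothetical names chosen for this example.

```hcl
# main.tf in the root module
module "network" {
  source = "./modules/network" # hypothetical local path to the child module

  # Input variable declared by the child module (hypothetical name)
  cidr_block = "10.0.0.0/16"
}

# Read a value that the child module exposes through an output block
# (vpc_id is a hypothetical output name).
output "network_vpc_id" {
  value = module.network.vpc_id
}
```

After adding or changing a module block, run terraform init again so the module is installed before you plan or apply.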

3. What exactly is Sentinel? Can you provide a few examples that we can use for Sentinel policies?

Sentinel is a policy-as-code framework developed by HashiCorp that is used to enforce compliance and governance policies in the Terraform workflow. It is available in HCP Terraform (formerly Terraform Cloud) and Terraform Enterprise, where policies are evaluated between the plan and apply stages of a run. It allows you to define custom policies and rules that ensure infrastructure deployments adhere to specific requirements, such as security, compliance, or operational standards.

Here are a few examples of how Sentinel can be used for policies in the Terraform workflow:

Enforce resource naming conventions: With Sentinel, you can create policies that enforce specific naming conventions for resources, such as ensuring that all resources are prefixed with a specific identifier.

Enforce security policies: You can use Sentinel to define security policies that ensure resources are configured securely, such as enforcing the use of encrypted data storage or enforcing specific access control requirements.

Enforce cost management policies: You can use Sentinel to define policies that ensure resources are configured in a cost-effective way, such as limiting the size of resources or enforcing specific pricing tiers.

Enforce compliance policies: Sentinel can be used to define policies that ensure infrastructure deployments comply with specific regulations or standards, such as HIPAA or PCI-DSS.

By using Sentinel policies, teams can ensure that infrastructure deployments are compliant, secure, cost-effective, and consistent with company standards, ultimately reducing the risk of security breaches and operational issues.

4. You have a Terraform configuration file that defines an infrastructure deployment. However, there are multiple instances of the same resource that need to be created. How would you modify the configuration file to achieve this?

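To create multiple instances of the same resource using Terraform, you can use the "count" meta-argument, which specifies how many instances of a resource to create. When the instances need distinct settings, the "for_each" meta-argument is an alternative that creates one instance per item in a map or set.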

For example, let's say you want to create three EC2 instances. You can define the resource block for the EC2 instance as usual, but add a "count" parameter with a value of 3:

resource "aws_instance" "ec2_instance" {
  count         = 3
  ami           = "ami-0c55b159cbfafe1f0"
  instance_type = "t2.micro"
}

This will create three EC2 instances with the same configuration.
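If the instances need to differ slightly, count.index or for_each can help. The sketch below reuses the AMI ID from the example above (AMI IDs are region-specific, so treat it as a placeholder); the resource names and tag values are our own illustrative choices.

```hcl
# count: instances are addressed by index, e.g. aws_instance.web[0]
resource "aws_instance" "web" {
  count         = 3
  ami           = "ami-0c55b159cbfafe1f0" # placeholder, region-specific
  instance_type = "t2.micro"

  tags = {
    Name = "web-${count.index}"
  }
}

# for_each: instances are addressed by key, e.g. aws_instance.named["api"]
resource "aws_instance" "named" {
  for_each      = toset(["api", "worker", "batch"])
  ami           = "ami-0c55b159cbfafe1f0" # placeholder, region-specific
  instance_type = "t2.micro"

  tags = {
    Name = each.key
  }
}

# Collect the IDs of all count-based instances with a splat expression
output "web_instance_ids" {
  value = aws_instance.web[*].id
}
```

A common reason to prefer for_each is that removing one item from the set only affects that instance, whereas removing an item from the middle of a count-based list shifts the indexes of the instances after it.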

5. You want to know from which paths Terraform is loading providers referenced in your Terraform configuration (*.tf files). You need to enable debug messages to find this out. Which of the following would achieve this?

A. Set the environment variable TF_LOG=TRACE

B. Set verbose logging for each provider in your Terraform configuration

C. Set the environment variable TF_VAR_log=TRACE

D. Set the environment variable TF_LOG_PATH

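Answer: A. Set the environment variable TF_LOG=TRACE. This enables the most verbose logging in the Terraform CLI, including information on where Terraform is loading providers from. TF_LOG_PATH only chooses the file that logs are written to and has no effect unless TF_LOG is also set, and TF_VAR_log would merely set an input variable named log.

TF_LOG accepts the following log levels, in order of decreasing verbosity: TRACE, DEBUG, INFO, WARN, and ERROR.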

6. The command below destroys everything that has been created in the infrastructure. How would you preserve a particular resource while destroying the rest?

terraform destroy

To keep a particular resource from being destroyed along with the rest of the infrastructure, first identify its address in the Terraform state.

Use the "terraform state list" command to get the list of all resources managed by Terraform:

terraform state list

This will give you a list of all the resources that Terraform is managing.

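Next, identify the resource that you want to keep and pass every other resource to the "terraform destroy" command with the "-target" flag. For example, to keep "example_resource1" when the state contains only example_resource1 and example_resource2:

terraform destroy -target=example_resource2

This destroys example_resource2 (and anything that depends on it) and leaves "example_resource1" in place. In a real project the addresses have the form type.name, for example aws_instance.web. An alternative is to run "terraform state rm" on the resource you want to keep, which makes Terraform stop managing it without deleting it, and then run a plain "terraform destroy". In that case, also remove its resource block from the configuration so that a later apply does not try to create it again.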
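If you are on Terraform 1.7 or later, a configuration-based way to keep a resource is the removed block, sketched below. The address aws_s3_bucket.keep_me is a hypothetical name used only for illustration.

```hcl
# Assumes Terraform 1.7 or later. Delete the original resource block for
# aws_s3_bucket.keep_me (hypothetical address) and add this in its place.
removed {
  from = aws_s3_bucket.keep_me

  lifecycle {
    destroy = false # drop the resource from state, leave the real bucket alone
  }
}
```

Once terraform apply has processed this block, the bucket is no longer tracked in state, so a later terraform destroy removes everything else and leaves it untouched.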

7. Which module is used to store .tfstate file in S3?

State storage in S3 is handled by Terraform's built-in "s3" backend rather than by a module. You configure it with a backend "s3" block inside the terraform block, giving the bucket name, the key (path) of the state file, and the region, and then run terraform init to initialize it. The bucket must exist before the backend is initialized; you can create it by hand, in a separate Terraform configuration, or with a community module. Enabling versioning and encryption on the bucket lets you recover earlier state versions and protects the data at rest, and a DynamoDB table can be configured for state locking so that two runs do not write to the state at once. Storing state in S3 instead of on a local disk makes it durable and shareable across the team.
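For reference, remote state in S3 is usually wired up with a backend block like the following sketch. The bucket name, key, region, and lock table name are all hypothetical, and the bucket and table are assumed to exist already.

```hcl
terraform {
  backend "s3" {
    bucket         = "my-terraform-state"     # hypothetical, must already exist
    key            = "prod/terraform.tfstate" # path of the state object in the bucket
    region         = "us-east-1"
    encrypt        = true                     # server-side encryption of the state object
    dynamodb_table = "terraform-locks"        # hypothetical table used for state locking
  }
}
```

Run terraform init after adding or changing this block; Terraform will offer to migrate any existing local state. Recent Terraform versions may offer alternatives to the DynamoDB lock table, so check the backend documentation for the version you use.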

8. How do you manage sensitive data in Terraform, such as API keys or passwords?

Managing sensitive data in Terraform is important to ensure that credentials and other sensitive information are not exposed. One way is to keep the values out of the Terraform configuration and supply them through environment variables: an environment variable named TF_VAR_NAME sets the input variable NAME, which is then referenced in the configuration as var.NAME. Declaring the variable with sensitive = true keeps its value out of plan and apply output.

Another option is to use a secrets management tool such as HashiCorp Vault, AWS Secrets Manager, or Azure Key Vault. Secrets are stored in the tool and read at run time through the corresponding Terraform provider (for example, the Vault provider), and these tools offer encryption, access control, and audit logging capabilities to protect sensitive data.

Whichever approach you choose, remember that secret values passed to resources can still end up in the state file in plain text, so the state itself should be stored in an encrypted backend with restricted access.
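A small sketch of both approaches follows. The variable name db_password, the secret ID prod/db/password, and the database settings are hypothetical values chosen for illustration.

```hcl
# Option 1: a sensitive input variable, supplied from the environment, e.g.
#   export TF_VAR_db_password='...'
variable "db_password" {
  type      = string
  sensitive = true # redacts the value in plan and apply output
}

# Option 2: read the secret from AWS Secrets Manager at run time
# (the secret ID is hypothetical and must already exist).
data "aws_secretsmanager_secret_version" "db" {
  secret_id = "prod/db/password"
}

resource "aws_db_instance" "example" {
  identifier          = "example-db"
  engine              = "postgres"
  instance_class      = "db.t3.micro"
  allocated_storage   = 20
  username            = "app"
  password            = var.db_password
  # Alternative: data.aws_secretsmanager_secret_version.db.secret_string
  skip_final_snapshot = true
}
```

Either way, the password never appears in the .tf files that are committed to version control.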

9. You are working on a Terraform project that needs to provision an S3 bucket, and a user with read and write access to the bucket. What resources would you use to accomplish this, and how would you configure them?

To provision an S3 bucket and a user with read and write access to the bucket in Terraform, we would use the following resources:

aws_s3_bucket resource to create the S3 bucket.

aws_iam_user resource to create an IAM user.

aws_iam_access_key resource to create access keys for the IAM user.

aws_iam_user_policy_attachment resource to attach a policy to the IAM user.

Here's an example configuration that would create an S3 bucket, an IAM user, access keys, and a policy that grants read and write access to the bucket:

# Create S3 bucket
resource "aws_s3_bucket" "my_bucket" {
  bucket = "my-bucket"
}

# Create IAM user
resource "aws_iam_user" "my_user" {
  name = "my-user"
}

# Create access keys for IAM user
resource "aws_iam_access_key" "my_user_access_key" {
  user = aws_iam_user.my_user.name
}

# Attach a policy to the IAM user that grants read and write access to the S3 bucket
resource "aws_iam_user_policy_attachment" "my_user_policy_attachment" {
  policy_arn = "arn:aws:iam::aws:policy/AmazonS3FullAccess"
  user       = aws_iam_user.my_user.name
}

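This configuration creates an S3 bucket named my-bucket, an IAM user named my-user, access keys for the user, and a policy attachment. Note that the AWS managed policy AmazonS3FullAccess grants full access to every S3 bucket in the account, not just my-bucket, so in practice you would scope the permissions to this one bucket. The IAM user can then use the access keys to read from and write to the S3 bucket. Keep in mind that the secret access key is stored in the Terraform state, so the state must be protected accordingly.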
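Because AmazonS3FullAccess covers every bucket in the account, a tighter alternative is an inline policy limited to my_bucket. The sketch below would replace the aws_iam_user_policy_attachment resource; the policy name and statement IDs are our own illustrative choices, and you may need more actions (for example s3:DeleteObject) depending on what "write" means for your use case.

```hcl
# Build a policy document scoped to the bucket defined above
data "aws_iam_policy_document" "bucket_rw" {
  statement {
    sid       = "ListBucket"
    actions   = ["s3:ListBucket"]
    resources = [aws_s3_bucket.my_bucket.arn]
  }

  statement {
    sid       = "ReadWriteObjects"
    actions   = ["s3:GetObject", "s3:PutObject"]
    resources = ["${aws_s3_bucket.my_bucket.arn}/*"]
  }
}

# Attach the document to the user as an inline policy
resource "aws_iam_user_policy" "my_user_bucket_rw" {
  name   = "my-bucket-read-write"
  user   = aws_iam_user.my_user.name
  policy = data.aws_iam_policy_document.bucket_rw.json
}
```

The first statement applies to the bucket itself (listing), while the second applies to the objects inside it, which is why the resource ARNs differ.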

10. Who maintains Terraform providers?

Terraform providers are published on the Terraform Registry and fall into three tiers depending on who maintains them. Official providers are owned and maintained by HashiCorp; the widely used aws, azurerm, and google providers are published under the hashicorp namespace and developed in the open, with contributions from the cloud vendors and the community. Partner providers are written and maintained by technology companies for their own services. Community providers are published and maintained by individuals or groups in the Terraform community.
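The publisher of a provider is visible in its source address, which has the form namespace/name. As a sketch, a required_providers block that pins two providers from the hashicorp namespace might look like this; the version constraints are illustrative, not recommendations.

```hcl
terraform {
  required_providers {
    aws = {
      source  = "hashicorp/aws"
      version = "~> 5.0" # illustrative constraint
    }
    azurerm = {
      source  = "hashicorp/azurerm"
      version = "~> 3.0" # illustrative constraint
    }
  }
}
```

Looking up the same address on the Terraform Registry shows the provider's tier and links to its source repository.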

11. How can we export data from one module to another?

In Terraform, you can export data from one module and use it in another module using the output and module blocks.

First, in the module where you want to export data, define the output value you want to export using the output block. For example, to export the ID of an AWS VPC, you can define the following output block in the VPC module:

output "vpc_id" {
  value = aws_vpc.vpc.id
}

Then, in the configuration that needs this data, use a module block to call the module where the output value is defined and reference the output as module.NAME.OUTPUT_NAME. For example, to read the VPC ID from the VPC module when creating a subnet:

module "vpc" {
  source = "./vpc"
  # ...
}

resource "aws_subnet" "subnet" {
  vpc_id = module.vpc.vpc_id
  # ...
}

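Here, the module.vpc.vpc_id expression refers to the vpc_id output defined in the VPC module, which is exposed through the module block in the calling configuration. Outputs are only visible to the module that calls the child module, so to share a value between two sibling modules, the parent passes one module's output into the other module as an input variable.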
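When the VPC and the subnet live in two separate modules, the value has to travel through their common parent. A minimal sketch, assuming a hypothetical layout with ./vpc and ./subnet directories and a vpc_id input variable in the subnet module:

```hcl
# Root module (main.tf); ./vpc and ./subnet are hypothetical directories
module "vpc" {
  source = "./vpc"
}

module "subnet" {
  source = "./subnet"
  vpc_id = module.vpc.vpc_id # output of one module becomes input of the other
}

# Inside ./subnet/variables.tf the input would be declared as:
# variable "vpc_id" {
#   type = string
# }
```

Terraform infers from the module.vpc.vpc_id reference that the VPC must be created before the subnet module's resources, so no explicit depends_on is needed here.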

III. Conclusion

Importance of being prepared for Terraform interviews:

Terraform is a powerful tool for infrastructure automation that is becoming increasingly popular among DevOps and infrastructure teams. As such, it is important to be prepared for Terraform interviews if you are seeking a job in this field. Here are some reasons why being prepared for Terraform interviews is important:

Demonstrates your knowledge: Being prepared for Terraform interviews demonstrates that you have a good understanding of the tool, its capabilities, and how it can be used to automate infrastructure. This will make you stand out from other candidates who may not be as familiar with Terraform.

Shows your problem-solving skills: Terraform is a tool that requires problem-solving skills to use effectively. Being prepared for Terraform interviews shows that you have these skills and can use them to solve complex infrastructure problems.

Helps you anticipate interview questions: Being prepared for Terraform interviews can help you anticipate common interview questions and prepare thoughtful responses. This will make you appear more confident and competent during the interview.

Improves your chances of getting the job: Being prepared for Terraform interviews can significantly improve your chances of getting the job. Employers want to hire candidates who are knowledgeable and capable of working with the tools and technologies they use in their organization.

In summary, being prepared for Terraform interviews is essential if you want to succeed in a DevOps or infrastructure role. It demonstrates your knowledge and problem-solving skills, and can significantly improve your chances of getting the job.

Final thoughts and additional resources for further study:

In conclusion, Terraform is a powerful tool for infrastructure automation, and being prepared for Terraform interviews is essential for landing a job in this field. It's important to have a solid understanding of Terraform's core concepts, including the Terraform workflow, modules, and providers. Additionally, having hands-on experience with Terraform and being able to showcase your skills through projects is a great way to stand out in the interview process.

For further study, there are many resources available online, including official documentation, tutorials, and courses. Some recommended resources include:

Terraform Up & Running by Yevgeniy Brikman: terraformupandrunning.com

Terraform Associate Certification: hashicorp.com/certification/terraform-assoc..

Official Terraform documentation: terraform.io/docs/index.html

Terraform tutorials and examples: learn.hashicorp.com/terraform

Terraform modules on the Terraform Registry: registry.terraform.io

HashiCorp Community Forum: discuss.hashicorp.com/c/terraform-core/9

Terraform training courses on Udemy: udemy.com/topic/terraform

Terraform videos on YouTube: youtube.com/playlist?list=PLy7NrYWoggjw0GpI..

Terraform blog on HashiCorp website: hashicorp.com/blog/tag/terraform

Finally, don't forget to check out Shubham Londhe's Terraform playlist on YouTube, which can be found at the following link: youtube.com/watch?v=rnNkRwD2yEc&list=PL...

By dedicating time and effort to learning and practicing Terraform, you can increase your chances of success in Terraform interviews and advance your career in infrastructure automation.
